Cloud-native technology has become a practical foundation for businesses that need their applications to grow with demand. This introduction explains what cloud-native technology is and how it supports scalable growth.

The sections that follow cover the essential components, scalability factors, security considerations, deployment strategies, and monitoring techniques of cloud-native architectures.

Introduction to Cloud-Native Technology

Cloud-native technology refers to the practice of building and running applications that utilize the advantages of cloud computing. This approach emphasizes scalability, flexibility, and resilience, making it ideal for modern business environments. By leveraging cloud-native solutions, organizations can optimize their operations, reduce costs, and enhance their overall agility in responding to market demands.

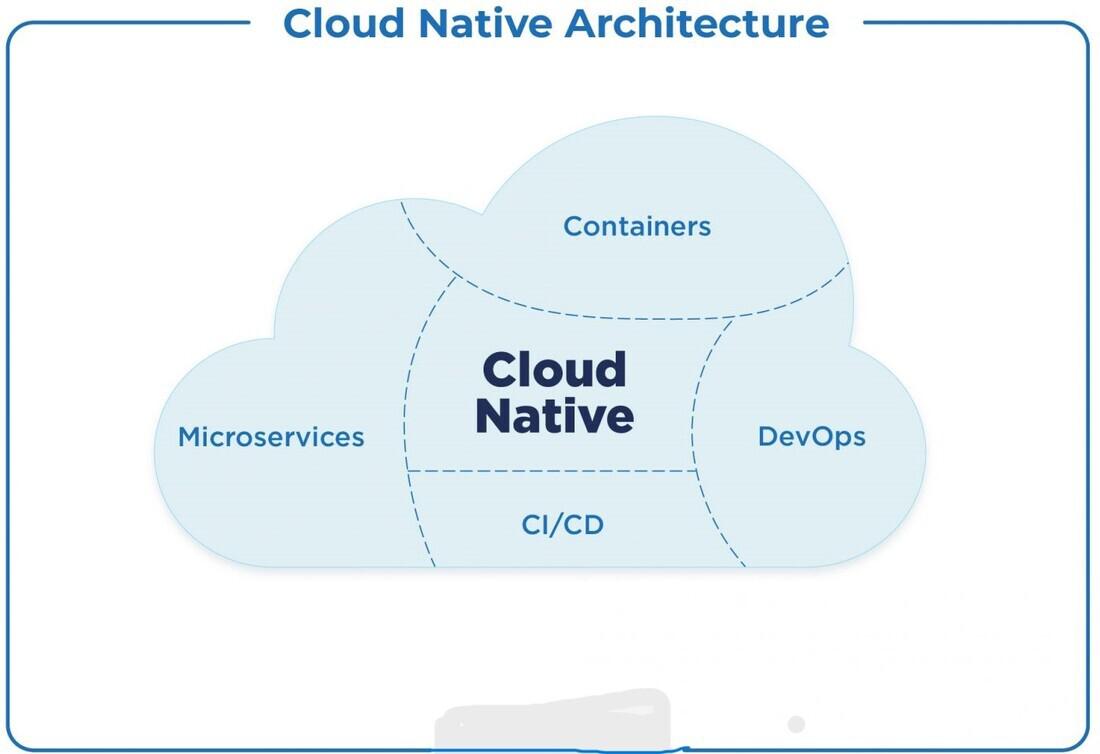

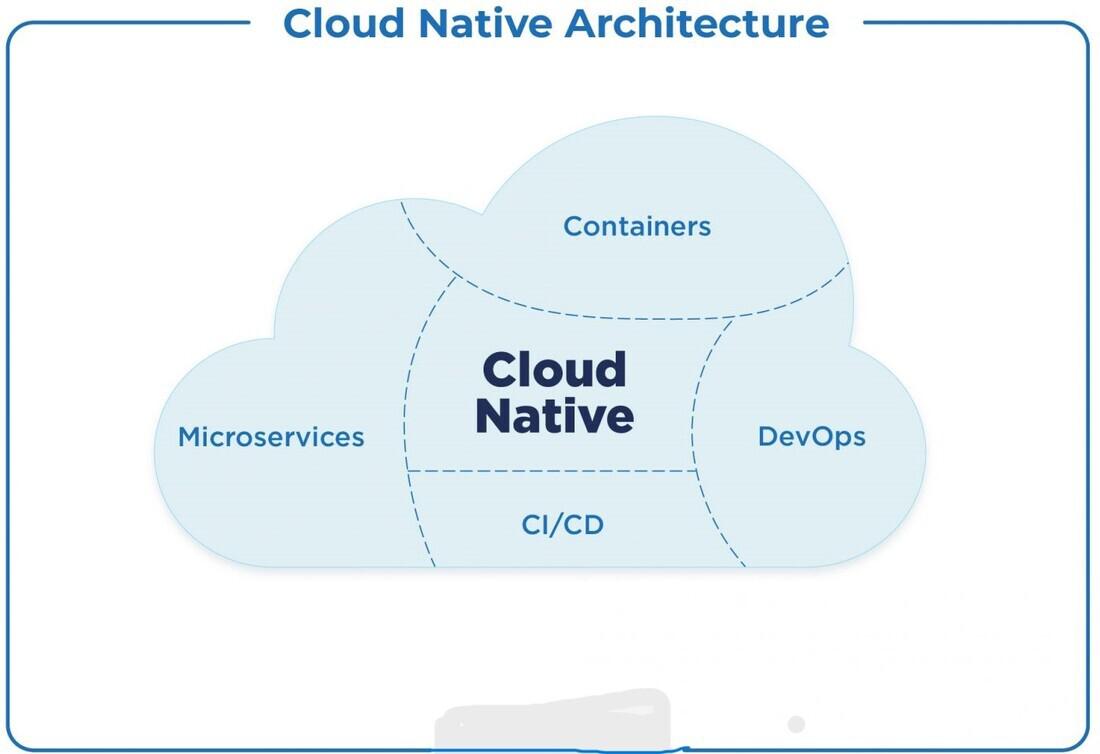

Key Principles of Cloud-Native Solutions

- Microservices Architecture: Cloud-native applications are built as a collection of small, independent services that can be developed, deployed, and scaled independently.

- Containerization: Containers encapsulate an application and its dependencies, providing consistency across different environments and enabling portability.

- DevOps Practices: Automation, collaboration, and continuous integration/continuous deployment (CI/CD) are essential components of cloud-native development workflows.

- Resilience and Elasticity: Cloud-native applications are designed to be resilient to failures and scale dynamically to accommodate varying workloads.
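One common way to make a service resilient to transient failures is to retry a failed call with exponential backoff. The sketch below is a minimal illustration, not a prescribed implementation: the function name `call_with_retries` and its parameters are hypothetical, and production code would usually retry only on specific, known-transient error types.

```python
import random
import time


def call_with_retries(operation, max_attempts=4, base_delay=0.1, sleep=time.sleep):
    """Call operation(), retrying with exponential backoff and jitter on failure.

    All names and defaults here are illustrative assumptions.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            # The base delay doubles on each attempt; random jitter keeps
            # many clients from retrying at exactly the same moment.
            delay = base_delay * (2 ** (attempt - 1))
            sleep(delay + random.uniform(0, delay))
```

The `sleep` parameter is injectable so the waiting behavior can be replaced in tests.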

Benefits of Adopting Cloud-Native Technologies

- Scalability: Cloud-native solutions let businesses scale applications up or down in response to changing demand, so performance holds steady as load grows.

- Cost Efficiency: By leveraging cloud resources and adopting automation, organizations can reduce operational costs and optimize resource utilization.

- Agility: Cloud-native technologies empower businesses to innovate rapidly, deploy updates frequently, and stay ahead of the competition in a fast-paced market.

- Improved Reliability: The distributed nature of cloud-native applications improves fault tolerance, so a failure in one component is less likely to cause downtime for users.

Key Components of Cloud-Native Architecture

Cloud-native architecture is designed to take full advantage of cloud computing principles and technologies to build scalable and flexible applications. It consists of several key components that work together seamlessly to support modern cloud-native solutions.

Containers

Containers play a vital role in cloud-native solutions by providing a lightweight, portable, and isolated environment for deploying and running applications. They encapsulate an application and its dependencies, making it easy to move between different environments while ensuring consistency and reliability.

Containers enable developers to package their code, dependencies, and configuration in a single unit, allowing for faster deployment and efficient resource utilization.

Microservices

Microservices architecture involves breaking down applications into smaller, independent services that can be developed, deployed, and scaled independently. This approach allows for greater agility, scalability, and resilience compared to traditional monolithic architectures. Microservices enable teams to work on different parts of the application simultaneously, leading to faster development cycles and easier maintenance.

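They also promote better fault isolation, since a failure in one service need not bring down the others. The trade-off is that calls between services cross the network, which adds latency and operational complexity.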

Serverless Computing

Serverless computing is a cloud computing model where cloud providers dynamically manage the allocation of machine resources. Its best-known form is Function as a Service (FaaS), in which developers focus on writing functions that are triggered by specific events or requests, without having to worry about server management or infrastructure provisioning.

This model allows for automatic scaling, reduced operational overhead, and cost efficiency, as developers only pay for the actual compute resources used. Serverless computing is ideal for event-driven applications and scenarios where unpredictable workloads are common.
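To make the event-driven model concrete, here is a minimal sketch of a function in the FaaS style. The `(event, context)` signature follows the convention used by AWS Lambda's Python runtime; the shape of the event (a dictionary with an optional `name` key) and the response format are assumptions chosen for illustration.

```python
import json


def handler(event, context=None):
    """Handle one event and return an HTTP-style response dictionary.

    The event layout is hypothetical; real platforms define their own.
    """
    name = (event or {}).get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

The function holds no state between invocations, which is what lets a platform run as many copies as the incoming event rate requires.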

Scalability in Cloud-Native Applications

Scalability is a crucial aspect of cloud-native applications, allowing them to handle increasing workloads efficiently and seamlessly. By leveraging cloud-native principles and technologies, applications can scale both vertically and horizontally to meet growing demands.

Horizontal vs. Vertical Scaling

Horizontal scaling involves adding more instances of an application to distribute the load across multiple servers. This approach allows for increased capacity and improved fault tolerance. On the other hand, vertical scaling involves increasing the resources of a single server, such as CPU, memory, or storage, to handle more workload.

Vertical scaling is bounded by the largest machine available, whereas horizontal scaling can keep adding instances, provided the application is able to distribute work across them.

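- Horizontal Scaling: Tools like Kubernetes (through its Horizontal Pod Autoscaler) and AWS Auto Scaling adjust the number of application instances automatically based on predefined metrics such as CPU utilization or incoming traffic. Docker Swarm can also run multiple replicas of a service, although scaling there is triggered manually or by external tooling rather than by a built-in autoscaler. These tools distribute the workload and help maintain high availability.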

- Vertical Scaling: Services such as AWS EC2, Azure Virtual Machines, and Google Compute Engine support vertical scaling by moving a workload to a larger instance or machine type. Resizing typically requires stopping or restarting the instance, so plan for a brief interruption. This approach suits applications whose resource requirements can be met by scaling up a single server.
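Metric-based horizontal scaling can be reduced to a small calculation. The sketch below uses the formula documented for the Kubernetes Horizontal Pod Autoscaler, where the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The function name and the `min_replicas` and `max_replicas` defaults are illustrative assumptions, and real autoscalers add tolerances and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Return how many instances are needed to bring the metric back to target.

    Simplified sketch: no tolerance band, no cooldown between scaling events.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds so scaling never runs away.
    return max(min_replicas, min(max_replicas, raw))
```

For example, four instances running at 90% average CPU against a 60% target would scale out to six instances.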

Security Considerations in Cloud-Native Environments

In a cloud-native environment, ensuring security is crucial to protect applications and data from potential threats. With the dynamic nature of cloud-native architecture, there are specific security challenges that need to be addressed effectively.

Security Challenges in Cloud-Native Environments

- Container Security: Containers are a fundamental part of cloud-native applications, but they can pose security risks if not properly configured. Implementing container security measures such as image scanning, runtime protection, and access control is essential.

- Microservices Communication: With microservices architecture, there are multiple communication points that need to be secured to prevent unauthorized access. Using encryption, mutual TLS, and API gateways can help secure microservices communication.

- Secrets Management: Managing sensitive information such as API keys, passwords, and certificates securely is crucial in cloud-native environments. Utilizing secret management tools and rotating credentials regularly can enhance security.
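As a small illustration of the secrets management point, the sketch below reads a credential from the environment at startup instead of hard-coding it, fails fast when it is missing, and redacts it before logging. Environment variables are only one possible delivery mechanism (a dedicated secrets manager is another), and the names `load_secret`, `redact`, and `MissingSecretError` are hypothetical.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is absent from the environment."""


def load_secret(name, environ=None):
    """Fetch a required secret, failing fast if it is missing or empty."""
    environ = os.environ if environ is None else environ
    value = environ.get(name, "")
    if not value:
        raise MissingSecretError(f"required secret {name!r} is not set")
    return value


def redact(value, visible=4):
    """Return a log-safe form of a secret showing only the last few characters."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]
```

Because the value is looked up when the process starts, rotating a credential becomes a matter of updating the environment and restarting the service, with no code change.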

Best Practices for Securing Cloud-Native Applications

- Implement Zero Trust Security Model: Adopting a zero-trust approach where all requests are verified before granting access helps prevent unauthorized access to applications and data.

- Continuous Monitoring and Auditing: Regularly monitoring and auditing cloud-native applications can help detect any suspicious activities or anomalies that may indicate a security breach.

- Security Automation: Leveraging automation tools for security processes such as vulnerability scanning, compliance checks, and incident response can improve security posture.
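The zero-trust idea of verifying every request can be sketched with a signed request check. This is one possible mechanism among several, assuming a shared secret key between caller and service; the function names are hypothetical, and real deployments more often rely on mutual TLS or signed tokens issued by an identity provider.

```python
import hashlib
import hmac


def sign(message: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 signature for a request payload."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def is_authorized(message: bytes, signature: str, key: bytes) -> bool:
    """Verify the signature on every request, trusting nothing by default."""
    expected = sign(message, key)
    # compare_digest runs in constant time, which avoids leaking how many
    # leading characters of the signature matched.
    return hmac.compare_digest(expected.encode("utf-8"), signature.encode("utf-8"))
```

A request whose payload or signature has been altered in transit fails the check, regardless of where on the network it originated.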

Implementing Security Measures without Compromising Scalability

- Scalable Security Controls: Implement security controls that can scale with the application to ensure that security measures do not become a bottleneck for scalability.

- Microsegmentation: Using microsegmentation to create security zones within the cloud-native environment can help contain security incidents and limit their impact on the overall system.

- DevSecOps Integration: Integrating security practices into the development and operations processes from the beginning (DevSecOps) can ensure that security is a priority without hindering scalability.

Deployment Strategies for Cloud-Native Solutions

When it comes to deploying cloud-native solutions, businesses have various strategies at their disposal to ensure seamless updates and minimal downtime. Two popular deployment strategies are blue-green deployments and canary releases, each offering unique advantages and disadvantages.

Blue-Green Deployments

- Blue-green deployments involve running two identical production environments simultaneously: one active (blue) and one idle (green).

- Advantages:

  - Allows for quick and easy rollback in case of issues by simply switching traffic to the stable environment.

  - Reduces downtime during deployments as the new version is fully set up and tested before routing traffic to it.

- Disadvantages:

  - Requires additional resources to maintain two identical environments, which can increase costs.

  - More complex to set up and manage than simpler strategies such as updating a single environment in place.
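The traffic switch at the heart of a blue-green deployment can be modeled in a few lines. The sketch below is a toy in-memory model, assuming a hypothetical `BlueGreenRouter` class and a caller-supplied health check; in practice the switch is performed by a load balancer, DNS record, or service mesh.

```python
class BlueGreenRouter:
    """Toy model of two environments with one receiving live traffic."""

    def __init__(self, blue_version, green_version):
        self.environments = {"blue": blue_version, "green": green_version}
        self.live = "blue"

    @property
    def idle(self):
        return "green" if self.live == "blue" else "blue"

    def deploy_to_idle(self, version):
        """Install the new version where no user traffic can reach it yet."""
        self.environments[self.idle] = version

    def switch(self, health_check):
        """Cut traffic over to the idle environment only if it is healthy."""
        candidate = self.idle
        if not health_check(self.environments[candidate]):
            return False
        self.live = candidate
        return True

    def rollback(self):
        """Point traffic back at the previous environment."""
        self.live = self.idle

    def route(self):
        """Return the version currently serving users."""
        return self.environments[self.live]
```

Rollback is a single pointer flip because the previous version is still running, which is also why this strategy needs the capacity for two full environments.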

Canary Releases

- Canary releases involve gradually rolling out updates to a small subset of users before releasing them to the entire user base.

- Advantages:

  - Allows for real-time monitoring of the new version's performance and user feedback before full deployment.

  - Minimizes the impact of bugs or issues by limiting exposure to a small user group initially.

- Disadvantages:

  - Requires a robust monitoring and feedback mechanism to effectively evaluate the new version.

  - May delay full deployment if issues arise during the canary phase.
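One way to choose the "small subset of users" for a canary release is to hash each user ID into a stable bucket. The sketch below assumes string user IDs and a hypothetical `in_canary` helper; hashing is only one selection method, and others (by region, by account tier, or by random sampling per request) are equally valid.

```python
import hashlib


def in_canary(user_id: str, percent: int) -> bool:
    """Decide whether a user should receive the canary version.

    The same user always lands in the same bucket, so their experience
    stays consistent while the rollout percentage is increased.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable value in 0..99
    return bucket < percent
```

Raising `percent` from 5 to 20 keeps every user who was already in the canary group and only adds new ones, which makes the gradual rollout predictable.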

Based on specific business needs, the choice between blue-green deployments and canary releases can vary. For businesses that prioritize rapid rollback capabilities and minimal downtime, blue-green deployments may be more suitable. On the other hand, if real-time feedback and gradual rollout are crucial, canary releases could be the preferred deployment strategy.

Monitoring and Observability in Cloud-Native Systems

Monitoring and observability are crucial aspects of cloud-native systems, providing insights into the performance, health, and behavior of applications and infrastructure. While monitoring focuses on collecting and analyzing data to track system metrics and performance, observability goes beyond by enabling a deeper understanding of the system's internal state based on the data collected.

Proactive monitoring is essential for ensuring the reliability of cloud-native applications. By continuously monitoring key metrics such as response times, error rates, and resource utilization, organizations can identify issues before they impact users and take proactive measures to maintain system performance and availability.
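The kind of check described above can be sketched as a small function that turns raw samples into alerts. The thresholds (1% error rate, 500 ms at the 95th percentile), the function names, and the choice to count only 5xx status codes as errors are assumptions made for illustration.

```python
import math


def error_rate(status_codes):
    """Fraction of requests that ended in a server error (5xx)."""
    if not status_codes:
        return 0.0
    errors = sum(1 for code in status_codes if code >= 500)
    return errors / len(status_codes)


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list, with pct from 0 to 100."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def check_health(status_codes, latencies_ms, max_error_rate=0.01, max_p95_ms=500):
    """Return a list of alert messages; an empty list means healthy."""
    alerts = []
    if error_rate(status_codes) > max_error_rate:
        alerts.append("error rate above threshold")
    if percentile(latencies_ms, 95) > max_p95_ms:
        alerts.append("p95 latency above threshold")
    return alerts
```

A percentile is used for latency rather than an average because a small number of very slow requests can hide behind a healthy-looking mean.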

Popular Tools and Techniques for Monitoring and Observability in Cloud-Native Environments

- Prometheus: A popular open-source monitoring and alerting toolkit that is widely used in cloud-native environments. It provides a flexible query language, powerful data model, and robust alerting capabilities.

- Grafana: An open-source visualization tool that works seamlessly with Prometheus and other data sources. Grafana allows users to create custom dashboards for real-time monitoring and analysis of metrics.

- ELK Stack (Elasticsearch, Logstash, Kibana): A comprehensive solution for log management and analysis. Elasticsearch is used to store and index log data, Logstash for log collection and processing, and Kibana for visualization and analysis.

- Jaeger: An open-source distributed tracing system that helps monitor and troubleshoot transactions in complex microservices architectures. It provides insights into request latency, dependencies, and error rates.

- Datadog: A cloud monitoring platform that offers a wide range of monitoring and observability tools, including infrastructure monitoring, application performance monitoring, and log management.
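To show what a tool like Prometheus actually collects, the sketch below renders a counter in the Prometheus text exposition format, which a server scrapes over HTTP. The `render_counter` helper is hypothetical and skips the escaping of special characters in label values; in practice an official client library would normally produce this output.

```python
def render_counter(name, help_text, samples):
    """Render a counter metric as Prometheus-style exposition text.

    samples is a list of (labels_dict, value) pairs. Simplified sketch:
    label values are not escaped.
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in samples:
        label_str = ",".join(f'{k}="{v}"' for k, v in sorted(labels.items()))
        if label_str:
            lines.append(f"{name}{{{label_str}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"
```

Labels such as the request method or status code are what allow a dashboard in a tool like Grafana to break one metric down along several dimensions.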

Closing Summary

In conclusion, cloud-native technology offers businesses a practical route to greater agility, security, and efficiency. Containers, microservices, automated scaling, controlled deployment strategies, and strong monitoring together let applications grow with demand while remaining reliable.

Clarifying Questions

How do cloud-native applications achieve scalability?

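Cloud-native applications achieve scalability through microservices architecture and containerization, combined with horizontal or vertical scaling. Horizontal scaling is often automated by tools such as Kubernetes or AWS Auto Scaling, which add or remove instances based on metrics like CPU utilization or incoming traffic.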

What are the security challenges specific to cloud-native environments?

Security challenges in cloud-native environments include securing containers (through image scanning, runtime protection, and access control), securing communication between microservices (through encryption, mutual TLS, and API gateways), and managing secrets such as API keys, passwords, and certificates.

What are blue-green deployments and canary releases?

Blue-green deployments involve running two identical production environments, with one serving live traffic while the other undergoes testing. Canary releases are a deployment strategy where a new version of the software is gradually rolled out to a small subset of users to minimize risk.